Let’s say you have just installed two NVMe drives. Your system should now show the following devices:

/dev/nvme0n1 /dev/nvme1n1

To use Raid 1 on these devices, you first need to partition them. If your drives are smaller than 2TB, you can use an msdos label with fdisk, but I prefer gpt with parted, so I will partition the disks using parted.

Open the disk nvme0n1 using parted

parted /dev/nvme0n1

Now, set the label to gpt

mklabel gpt

Now, create the primary partition

mkpart primary 0TB 1.9TB

This assumes your drive is 1.9TB; adjust the end value to match the size of your own drive.

Repeat the same steps for nvme1n1. This creates one partition on each device:

/dev/nvme0n1p1 /dev/nvme1n1p1
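The interactive parted steps above can also be scripted. The following is a minimal sketch, not a definitive procedure: the `run` and `partition_disk` helpers and the `DRY_RUN` variable are hypothetical names introduced here, and `0% 100%` is used instead of explicit sizes on the assumption that you want one partition spanning the whole drive. By default it only prints the commands so you can review them before running anything as root.

```shell
# Sketch: partition both drives non-interactively with parted.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

partition_disk() {
    # $1: whole-disk device, e.g. /dev/nvme0n1
    run parted --script "$1" mklabel gpt
    run parted --script --align optimal "$1" mkpart primary 0% 100%
}

for disk in /dev/nvme0n1 /dev/nvme1n1; do
    partition_disk "$disk"
done
```

Keep in mind that mklabel destroys the existing partition table, so double-check the device names before setting DRY_RUN=0.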

Now you can create the raid with the mdadm command as follows:

mdadm --create /dev/md201 --level=mirror --raid-devices=2 /dev/nvme0n1p1 /dev/nvme1n1p1

If you see "mdadm: command not found", you can install mdadm using the following:

yum install mdadm -y
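The two steps above (checking for mdadm and creating the mirror) could be combined roughly like this. It is a sketch under assumptions: `mirror_cmd` and `have_mdadm` are hypothetical helper names, and the script only prints the create command rather than running it, since creating an array needs root and overwrites the member partitions.

```shell
# Build the mdadm command for a two-device mirror (same flags as above).
mirror_cmd() {
    # $1: md device, $2 and $3: member partitions
    echo "mdadm --create $1 --level=mirror --raid-devices=2 $2 $3"
}

# Report whether mdadm is on PATH; the caller decides whether to install it.
have_mdadm() {
    command -v mdadm >/dev/null 2>&1
}

if have_mdadm; then
    echo "mdadm found; as root, run:"
else
    echo "mdadm missing; install it first (yum install mdadm -y), then run:"
fi
mirror_cmd /dev/md201 /dev/nvme0n1p1 /dev/nvme1n1p1
```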

Once done, you can check your raid using the following command:

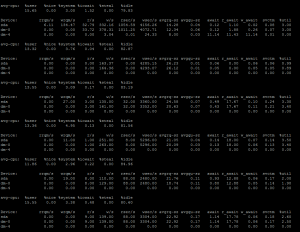

[root@bd3 ~]# cat /proc/mdstat

Personalities : [raid1]

md301 : active raid1 sdd1[1] sdc1[0]

976628736 blocks super 1.2 [2/2] [UU]

bitmap: 0/8 pages [0KB], 65536KB chunk

md201 : active raid1 nvme1n1p1[1] nvme0n1p1[0]

1875240960 blocks super 1.2 [2/2] [UU]

bitmap: 2/14 pages [8KB], 65536KB chunk

md124 : active raid1 sda5[0] sdb5[1]

1843209216 blocks super 1.2 [2/2] [UU]

bitmap: 4/14 pages [16KB], 65536KB chunk

md125 : active raid1 sda2[0] sdb2[1]

4193280 blocks super 1.2 [2/2] [UU]

md126 : active raid1 sdb3[1] sda3[0]

1047552 blocks super 1.2 [2/2] [UU]

bitmap: 0/1 pages [0KB], 65536KB chunk

md127 : active raid1 sda1[0] sdb1[1]

104856576 blocks super 1.2 [2/2] [UU]

bitmap: 1/1 pages [4KB], 65536KB chunk

unused devices: <none>
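In the output above, [2/2] [UU] means both members of a mirror are present and up; a missing or failed member shows up as an underscore, for example [U_]. As a rough sketch of how that check could be automated, the hypothetical `degraded_arrays` function below scans mdstat-format text and prints the name of any array with an underscore in its status field. It assumes the layout shown above and reads /proc/mdstat unless another file is given.

```shell
# Print the name of every md array whose status field (e.g. [UU])
# contains an underscore, which marks a missing or failed member.
degraded_arrays() {
    # $1: path to an mdstat-format file (defaults to /proc/mdstat)
    awk '
        /^md[0-9]+ :/ { name = $1 }
        /blocks/ && match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_")) print name
        }
    ' "${1:-/proc/mdstat}"
}
```

Calling degraded_arrays with no argument on the system above should print nothing, since every array reports [UU].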

Here are a few key pieces of information about software raid:

- It is better not to use Raid 10 with software raid. If the raid configuration is lost, it is hard to tell which drives mdadm had paired as mirrors and which it had striped across. As a rule of thumb, it is better practice to use raid 1 with software raid.

- Raid 1 in mdadm can serve read requests in parallel. While one request reads from one device, a second concurrent request can read from the other device. This gives up to double the read throughput when parallel readers are running; a single sequential read stream gains little. Writes still pay the cost of writing the data to both devices.
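To get a feel for the parallel-read behaviour described above, you could start two readers at different offsets and time them together. This is only a sketch: `parallel_read` is a hypothetical helper, it assumes GNU dd (for bs=1M), and the page cache can easily distort the numbers, so treat any result as indicative rather than a benchmark.

```shell
# Start two dd readers on different regions of the same device or file
# and wait for both to finish.
parallel_read() {
    # $1: file or block device to read, $2: MiB read by each reader
    dd if="$1" of=/dev/null bs=1M count="$2" 2>/dev/null &
    dd if="$1" of=/dev/null bs=1M count="$2" skip="$2" 2>/dev/null &
    wait
}
```

In bash, something like `time parallel_read /dev/md201 4096` run as root could then be compared against a single dd reading the same total amount of data.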